Questions or Comments? Please send me a tweet or shoot me an email

After creating the Mixed Reality trailers for Fantastic Contraption and Job Simulator I wanted to dive a little bit deeper into virtual cinematography by filming an entire trailer inside of VR rather than mixing live action and virtual reality.

The idea of filming in-game avatars rather than the actual physical player on a green screen is something we did in a smaller scope back on the Mixed Reality Fantastic Contraption trailer. I wanted to expand on that idea and see what we could do filming a whole trailer this way with different focal lengths and camera moves like you would in a traditional live action shoot.

Mixed Reality trailers are awesome, but creating high quality mixed reality videos is incredibly time consuming, expensive, and logistically complex from both a technical and creative standpoint. Filming an avatar in third person like you would in a normal live action shoot has many advantages and in many cases may be a better overall solution for showcasing your VR game/project.

Why First Person VR footage (generally) sucks

There's a reason movies aren't shot in first person. Our brains react emotionally to seeing footage of actors performing in front of us in a way that's presented cinematically. We don't react emotionally on nearly the same level when we're literally seeing through another person's eyes. There's so much nuance that's lost about the performer when we can't see their body or how they fit into their world. Virtual Reality is no different, but unfortunately first person footage is generally what is used to explain and promote VR games. This footage falls flat emotionally for many technical and creative reasons.

By default, first person footage is what is output from the current gen of VR hardware/software so most people just stop there and use that footage since it's easy to generate. Additionally, most raw video output for first person VR footage is un-smoothed which makes it hard to watch. Your brain and eyes naturally cancel out the hundreds of little micro adjustments and movements your head is making so your head appears to be moving smoothly to you. But take a look at most first person VR footage on YouTube, and it's a shaky mess. Your head moves a lot more than you realize, and the 2D footage feels unfocused and hard to watch.

If you absolutely need to use first person footage, make sure you create a custom HMD camera that takes the output of the HMD and smooths it so that it's easier to watch. We did this for the Job Simulator trailer and it was very successful.
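Here's a minimal sketch of the kind of smoothing a camera like that can apply each frame. It assumes exponential smoothing for position and spherical interpolation (slerp) for rotation, with quaternions stored as (w, x, y, z). This is not the code from the Job Simulator trailer, and names like `smooth_time` are made up for illustration.

```python
import math

def smoothing_factor(smooth_time, dt):
    """Fraction of the remaining gap to close this frame (frame-rate independent)."""
    if smooth_time <= 0.0:
        return 1.0  # no smoothing: snap straight to the target
    return 1.0 - math.exp(-dt / smooth_time)

def smooth_position(current, target, smooth_time, dt):
    """Move the camera position part of the way toward the raw HMD position."""
    a = smoothing_factor(smooth_time, dt)
    return tuple(c + (t - c) * a for c, t in zip(current, target))

def slerp(q0, q1, a):
    """Spherical interpolation between two unit quaternions (w, x, y, z)."""
    dot = sum(p * q for p, q in zip(q0, q1))
    if dot < 0.0:
        # q and -q are the same rotation; flip so we take the shorter arc
        q1 = tuple(-c for c in q1)
        dot = -dot
    if dot > 0.9995:
        # nearly identical rotations: plain lerp avoids dividing by ~0
        out = tuple(p + (q - p) * a for p, q in zip(q0, q1))
    else:
        theta = math.acos(dot)
        s0 = math.sin((1.0 - a) * theta) / math.sin(theta)
        s1 = math.sin(a * theta) / math.sin(theta)
        out = tuple(p * s0 + q * s1 for p, q in zip(q0, q1))
    n = math.sqrt(sum(c * c for c in out))
    return tuple(c / n for c in out)

def smooth_rotation(current, target, smooth_time, dt):
    """Rotate the camera part of the way toward the raw HMD rotation."""
    return slerp(current, target, smoothing_factor(smooth_time, dt))
```

Calling `smooth_position` and `smooth_rotation` once per frame with the raw HMD pose as the target gives a camera that trails the head slightly. A larger `smooth_time` means steadier footage at the cost of more lag.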

Ideally, you need to see the player inside of their virtual environment to make the biggest visual impact and connect emotionally to the viewer. Whether that's through creating a mixed reality trailer or filming an in-game avatar depends on the needs and budget of the project.

Filming VR from a third person perspective allows you to create dynamic and interesting looking shots that capture the essence of what it feels like to be in the game. Space Pirate Trainer is a perfect example. What the game looks like from a first person perspective is not what it feels like to actually play the game. Take a look at the different examples below. The third person footage feels kinetic, cinematic, dynamic and you emotionally connect to it. The first person footage feels cluttered, confusing, and visually uninteresting. Filming a player in third person through mixed reality, or filming an in-game avatar solves many of these communication problems.

THIRD PERSON CAMERA

This footage is more kinetic, cinematic and dynamic. The action is framed in a cinematic way, the camera follows the player's reaction to the incoming droid and the camera can anticipate the movement over to the large droid creating an interesting and compelling shot.

FIRST PERSON CAMERA

It's hard to tell what's going on in this shot. The gun and shield obscure many of the main aspects of it. It's not cinematic, it's not kinetic, and doesn't feel interesting or exciting to watch.

Here's an example from the Fantastic Contraption Oculus Touch trailer. Which shot feels more compelling and dynamic? Which shot gives you a better sense of what is happening in the game?

THIRD PERSON CAMERA

This shot feels dynamic and interesting to watch. The camera starts out wide, then pans in with precise timing on the player grabbing a wheel from Neko to illustrate how she acts as the toolbox in game. We then pan past her and focus in on the contraption the player is building following the motion of his hand.

FIRST PERSON CAMERA

This shot feels flat, boring and above all kind of vomit inducing because of the angle of the player's head. Head tilt inside of the HMD feels completely natural to the player, but the resulting 2D footage feels wrong. Neko also gets lost in the green grass, and it's not clear what the player is grabbing from.

Here's another example from Space Pirate Trainer. This game is all about moving around in the environment to shoot/duck/dodge incoming bullets being fired at you from all angles. It's basically impossible to convey how that works through first person footage since you have no context for how the player is moving within their environment.

THIRD PERSON CAMERA

This footage clearly conveys what's happening in-game. The player is being shot at by a swarm of droids, and he's dodging out of the way in slow motion.

FIRST PERSON CAMERA

It's very difficult to tell what the player is physically doing in this shot. He's dodging the bullets by walking to the right in his VR play space, but that's not being communicated visually very well. All it looks like is the droids are shooting to the left and missing the player.

Why Film an in-game avatar instead of filming mixed reality

Mixed reality trailers are awesome and communicate what it's like to be in VR. But trying to create a professional looking mixed reality trailer requires a lot of money, time and resources. That said, Owlchemy Labs' mixed reality tech should bring the complexity down once it makes it into more people's hands.

Filming an in-game avatar has many advantages:

It's more cost effective: All you really need is a wired/wireless third vive controller, a gimbal stabilizer (or even a cheap steady cam like the one I used pictured here), and some developer time to create an appropriately styled in-game avatar for the game on top of the extra time you need to film the trailer in VR. You eliminate all the overhead of having to buy/rent/create/light a green-screen setup and the crew necessary to operate this scale of operation and deal with the post production headaches to put them together.

To create the Space Pirate Trainer trailer I just needed someone to play the game while I filmed them.

You have more time to experiment: When you're on set with 5-10 people waiting for decisions - time is money. When you can shoot a trailer with fewer people over more days, you have more time to experiment and try different things. We shot for 3 days each for the Fantastic Contraption and Space Pirate Trainer trailers to get all the shots we needed.

Change direction on the fly

When you're shooting on set there's very little room for experimentation since you're under the gun in terms of time and what needs to get accomplished during the day. Since we didn't have the time pressure associated with that, we were able to take breaks to check our footage and see if what we were shooting was working - and if not - adapt on the fly and change course.

It's amazing that what feels correct while in the HMD playing the game looks wrong when you're filming from a third person perspective. Blocking is important and where the player is positioned in the world can completely change the look and readability of the shot. Small details like the angle of your wrist can have a large influence on how natural the avatar looks. Some movements might jank up the IK, so knowing how and where to place your body is important. Checking footage together keeps the person playing and the person filming on the same page, which really helps with this constant iteration.

Actor performance/fatigue: This is something that most people don't think about, but it's tiring to play VR. Especially a game like Space Pirate Trainer. Getting consistent/exciting performances from your player is very difficult when you're dealing with a game with so many random elements and it's exhausting after about 30-45 minutes of play. Not being under the time constraints and pressure of being on-set allows more time to breathe, take breaks and feel more relaxed about the whole process.

It's incredibly surprising how much emotion comes through the avatar from just those three data points (the head and two hands). If your player is feeling tired or just not emotionally into what they're doing, it's amazing how obvious that is when you're filming them. When you're filming a live action person on set for a mixed reality trailer, that exhaustion is compounded about tenfold and requires professional actors to keep up a level of performance for hours at a time.

Your character fits perfectly in the in-game world: It would have been extremely time consuming and expensive to create a costume similar to the in-game avatar in Space Pirate Trainer to make a player feel like they're part of the game's world. If we were to have shot this trailer with mixed reality, it would have felt out of place to have a player in their jeans/t-shirt facing off against all of these futuristic robots. We would have wanted to dress them up in costume, but even then, we wouldn't have been able to get close to what they were more easily able to achieve in-game since compositing a live player into a game's environment will never look as good as if they're part of the game world to begin with.

Variety is key: Seeing your game from one perspective (the player's) gets boring fast. To create a trailer or video that's compelling to watch you need to have multiple shots from different angles to give the viewer a sense of what they're looking at and how what they're seeing fits into the overall world of the game. That's basically impossible if you're limited to first person footage from the player's perspective. For a game like Space Pirate Trainer, the action is so fast and intense, that seeing it from different perspectives is the only way to properly visually communicate what is going on.

Creating the Museum of Other Realities trailer

The MOR trailer was the most complex avatar based trailer I’ve ever made. There were quite a few hurdles to overcome and a lot of custom tweaks for specific pieces of artwork in the museum. The MOR is an online ‘multiplayer’ experience, so we wanted to find a way to illustrate that in the trailer too.

The way I generally shoot avatar based trailers is with my friend Vince performing in VR while I film him with a virtual camera controlled by a third VIVE controller. But in this case, we wanted to shoot groups of people together, so this just wasn’t possible. We had to shoot everything live, with people in the museum but film them all remotely. We did this by streaming the location of the virtual camera to everyone in the MOR so they could see where the camera was while they were performing. I would film the avatars like I normally do, but there was no one physically present in my space. This solution worked really well, and I can’t wait to try it with some other multiplayer VR games!

We needed a ton of custom tools for this trailer - more so than any I’ve done in the past. There were simple things like toggling off the museum text on the walls, toggling off the names floating above the avatars, and hiding the UI elements on the floor to make the shots look cleaner.

For shots like teleporting into the ‘painting’, we needed to add custom controls to how the skybox would fade in around the player/camera. When you’re in VR, you simply ‘teleport’ into the new position, and the skybox pops in when you’re in the new location. But that pop looks really bad when you’re seeing it from a third person perspective, so we had to add options to time how that faded in.
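As a rough illustration of what "options to time how that faded in" can boil down to, here's a small sketch of a delayed, eased fade. The `delay` and `duration` parameters and the smoothstep easing are my assumptions, not the actual controls that were added to the MOR.

```python
def fade_alpha(elapsed, delay, duration):
    """Skybox opacity (0..1) `elapsed` seconds after the teleport starts.

    The fade waits `delay` seconds, then eases in over `duration` seconds
    with a smoothstep curve so it neither pops on nor stops abruptly.
    """
    if duration <= 0.0:
        return 1.0 if elapsed >= delay else 0.0  # degenerate case: hard cut
    t = (elapsed - delay) / duration
    t = min(max(t, 0.0), 1.0)          # clamp to the fade window
    return t * t * (3.0 - 2.0 * t)     # smoothstep easing
```

Exposing the delay and duration as tweakable values lets you line the fade up with the camera move instead of with the player's teleport.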

Portals in the MOR allow you to travel between ‘dimensions’ and step into a whole new piece of artwork. Illustrating this mechanic with a 3rd person camera was technically challenging because we had to alter the way that portals behaved specifically for this camera. We needed a way to trigger the fading of the rainbow texture on the portal when the player is in front of it, since they normally open automatically when you’re within a few feet of any portal.

Another challenge with the portals was making sure the external camera could actually travel between ‘dimensions’ regardless of whether or not the local player was also traveling through the portal. Even after figuring all this out, there were still some visual ‘hiccups’ when one player travels through the portals (like the hands disappearing for a few frames), but they’re relatively minor and don’t detract from the overall shot.

In addition to being able to control the 3rd person camera with a VIVE controller, we also added the ability to move it around with an Xbox controller. We added other camera options like an orbit cam that would always look at the player and orbit around them at a set distance and speed.
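An orbit cam like this is mostly a bit of trigonometry. Here's a minimal sketch, assuming a Y-up world and a camera that circles the player horizontally. The function name and the `height` parameter are illustrative, not taken from the MOR build.

```python
import math

def orbit_camera(player_pos, distance, speed_deg_per_s, t, height=0.0):
    """Return (camera_position, forward) for a camera circling the player.

    The camera sits `distance` away horizontally (must be > 0), `height`
    above the player, and `forward` is the unit vector pointing at the player.
    """
    angle = math.radians(speed_deg_per_s * t)
    px, py, pz = player_pos
    cam = (px + distance * math.sin(angle),
           py + height,
           pz + distance * math.cos(angle))
    # aim the camera back at the player
    to_player = (px - cam[0], py - cam[1], pz - cam[2])
    length = math.sqrt(sum(c * c for c in to_player))
    forward = tuple(c / length for c in to_player)
    return cam, forward
```

Evaluating this every frame with the player's current position keeps them centered even while they move around the space.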

One additional option was to lock the roll rotation of the camera so the horizon is always level. This, along with standard camera smoothing, is crucial for capturing professional looking footage, and also eliminates the need to attach the VIVE controller to any sort of stabilizer or gimbal.
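Keeping the horizon level amounts to throwing away the camera's roll (its rotation about the view direction) while keeping where it points. One common way to do that, sketched below under the assumption of a Y-up world, is to rebuild the camera's axes from its forward direction and the world up vector. The function names are hypothetical.

```python
import math

def _cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def _normalize(v):
    n = math.sqrt(sum(c * c for c in v))
    return tuple(c / n for c in v)

def level_horizon(forward, world_up=(0.0, 1.0, 0.0)):
    """Return (right, up, forward) axes for a camera with zero roll.

    Only the controller's forward direction is kept; `right` is forced to be
    horizontal, which is what makes the horizon level in the final image.
    """
    f = _normalize(forward)
    r = _cross(world_up, f)
    if sum(c * c for c in r) < 1e-12:
        raise ValueError("forward is parallel to world_up; roll is undefined")
    r = _normalize(r)
    u = _cross(f, r)  # completes the orthonormal basis
    return r, u, f
```

The camera can still pan and tilt freely. Only the sideways lean of the hand holding the controller is discarded.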

Of all the different challenges this trailer presented, I think the toughest one was trying to capture good performances of large groups of people when they’re not physically present in the room. Getting the right timing on shots like the ones below was a combination of trial and error, making a lot of different takes and a ton of luck! I would do my best to count down to certain actions, but since there’s always going to be a bit of a delay from me counting down to an action and the person performing it, we were constantly adjusting blocking and timing to get the shot.

Creating the Space Channel 5 VR trailer

Space Channel 5 VR was a bit of a dream! The game is visually amazing and really evokes that 90’s Dreamcast feel while still feeling modern, so I knew filming it in VR would be a blast!

Since the game is very linear and only has a few sections we came up with a few systems to make things easier to shoot. The developer added in buttons for each section of the game, so we could jump to later sections immediately. This way, we didn’t have to play through the whole game every time we wanted to shoot something at the end of the experience.

One of the specific additions we made early on was adding an “auto-play” mode. This basically made the two avatar players in-game ‘play’ the game automatically, mirroring Ulala’s moves so we didn’t actually need someone performing or playing the game in VR. The HMD sat on the floor, and we were able to concentrate strictly on capturing a pre-existing animated performance, which allowed us to focus strictly on creating interesting camera moves.

In addition to the standard Vive controller/Xbox camera control setups we’ve used on past projects, the developer added a way to set the rotation point of the camera to be offset from the physical location of the camera. This allowed us to move the camera to a specific spot, then use the Xbox controller to move it further away, while the rotation point (or camera’s interest) would still be set to that initial starting location. This let us create some really cool sweeping camera moves similar to those of a large scale techno-crane.
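One way to picture this is as a crane arm: the pivot stays put, the gamepad sets how long the arm is, and rotating the controller swings the camera around the pivot. The sketch below is my reading of that behavior, reduced to a yaw-only swing in a Y-up world. It is not the developer's implementation, and `arm_offset` is a made-up name.

```python
import math

def crane_camera_position(pivot, arm_offset, yaw_deg):
    """Swing `arm_offset` around the vertical axis through `pivot`.

    `pivot` is the fixed rotation point, `arm_offset` is where the camera
    sits relative to it at yaw 0, and `yaw_deg` comes from the controller.
    """
    a = math.radians(yaw_deg)
    ox, oy, oz = arm_offset
    # rotate the offset about the Y axis
    rx = ox * math.cos(a) + oz * math.sin(a)
    rz = -ox * math.sin(a) + oz * math.cos(a)
    return (pivot[0] + rx, pivot[1] + oy, pivot[2] + rz)
```

The longer the arm, the further the camera travels for the same twist of the wrist, which is where the big sweeping crane feel comes from.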

I tried to create some cool shots with this on my own, but the best results came when my friend Vince and I worked together, one of us controlling the camera rotation and the other moving it in space with the Xbox controls. We were able to get some really awesome shots working together this way - you can see a few examples below.

This feature was actually the result of a bug and a miscommunication of my original Xbox control scheme with the developer. When I tried the first build, I thought it was totally broken, but after experimenting with it a bit, I loved the camera moves we were able to produce, so we ended up turning the bug into one of the best features!

One other wrinkle was that we needed both an English and a fully Japanese version of the trailer! Creating new title cards wasn’t that big of an issue, but swapping out all of Ulala’s voice from the English gameplay to the Japanese VO was a bit of work. We eliminated as much of the existing in-game VO and SFX as possible and added most of it again in post. I love the way the Japanese version turned out!

Creating the SUPERHYPERCUBE VIVE trailer

SUPERHYPERCUBE presented a very interesting challenge. How do you film a game whose core gameplay is looking around cubes from a relatively fixed position and trying to fit them into a hole that's coming towards you?

It might seem like it makes more sense to create a trailer for this game with smoothed first person HMD footage, but there are two problems with this approach:

The first problem with first person footage from SHC is that most of the time, the hole in the wall is completely obscured by the cube in front of the player. The viewer never gets a chance to understand the core gameplay. All they see is a cube flying through a wall. This problem is compounded when watching the previous trailers on your phone. The hole in the wall is often not visible at all.

Moving the camera to different locations and changing the focal length of the lens (instead of being locked to the HMD) allows us the freedom to get both the cube and hole in the shot from a variety of angles. Placing the camera behind the HMD allows the viewer to see the hole in the wall, see the cube pass through, then see the trail left behind the player which emphasizes that the shape of the cube matched the shape of the hole in the wall.

The other issue is that first person footage starts to get repetitive very quickly. SHC is filled with amazing looking backgrounds as you progress through the game. The cubes also get VERY large, which doesn’t come across in first person footage at all. We wanted to give the viewer a sense of what it was like to be inside this neon filled world, and what it’s like to play the game.

Like with Space Pirate Trainer, everything the player sees is from the exact same location. In this case, you’re locked behind a large cube, peering around its edges. We move the camera around in this environment to give the viewer a sense of how the player fits in it, and get a wider variety of shots that would keep a viewer’s interest for the whole trailer.

From a technical standpoint, creating the handheld camera and tools needed to shoot this trailer was a challenge due to all the screen space effects that were being applied to the HMD camera in-game. SHC is filled with glows, bloom, grain, god rays etc, all of which vary depending on the location of the player’s head. Now all those effects had to be replicated so that they also worked from the new camera floating in the environment. This was not a trivial task and took months of part time work to get things looking right.

In addition to that, we needed to add cheats: a way to progress to the later levels of the game without getting the cube in the correct positions, level skips, and auto-charging of the different power-ups so we could trigger them whenever we wanted. We also added the ability to toggle off the UI when we shot reverse angles so that the text of the UI wouldn't appear reversed in those shots.

The scale and look of the HMD also went through a few iterations. With no real world scale to reference, the HMD model was scaled up quite a bit from its actual size so that it would read better on screen when paired against some of the larger cubes.

One other decision we made shortly before shooting the trailer was to hide the controller models entirely. When you’re playing, you can see the two VIVE controllers in-game, but we removed them from the trailer since they looked more distracting than anything and they don’t interact with the cubes or game in any discernible way.

One of the more custom controls we added specifically for this trailer was an “orbit cube” mode where we could use the Xbox controller to rotate around the cube from various positions to get some interesting angles on the action. This is featured pretty prominently from around 48s on in the trailer and I think it adds a variety of interesting angles on the gameplay we’ve never seen before.

Creating the Rick and Morty Virtual Rick-ality Trailer

The Rick and Morty Virtual Rick-ality trailer presented a number of unique challenges that we didn’t encounter while creating the Fantastic Contraption or Space Pirate Trainer trailers. The main issue was the linear nature and sheer number of different environments and locations in the game, and coming up with a way to access them quickly so we could capture as many unique shots as possible.

Owlchemy Labs created a custom build of the game with its own UI that allowed me to jump to all the major points in the game without having to travel through the game linearly every time. I can’t stress enough how important this was since we often did over a dozen takes of individual shots to get them right. Progressing through the game normally to get one take of a certain shot would not have been practical.

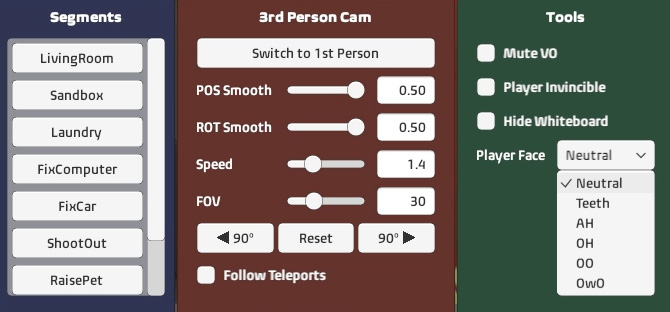

We also had custom tools to turn off the in-game voice over so we could capture raw gameplay sound effects without Rick and Morty talking over them. Other small tweaks like having a toggle to remove the large whiteboard in the garage for certain shots and making the player invincible in the alien shoot out section were extremely helpful.

We also added the ability to change Morty’s mouth blend shape so we could have different facial expressions that matched the action in the scene. Owlchemy implemented this specifically for the trailer since having the same static mouth throughout the video would have felt a bit emotionally flat and weird.

There’s a lot of custom scripted animation events in Rick and Morty VR (such as the garage transformation seen above), and we wanted to show off a few of them in the trailer in an interesting way.

It’s important to get a sense of where the player is in the virtual environment, and placing the camera at a different point than the player can lead to some unexpectedly amazing shots. (I love the wide shot of Morty on the Satellite). We did this by offsetting the handheld camera in space with an Xbox 360 controller to get a more cinematic point of view on the action. Moving the camera around the environment and finding positions to get cool shots is extremely fun and rewarding.

One side effect of doing this kind of thing is you can run into situations where you're looking at geometry or parts of a level that were never designed to be seen from this perspective. For example, in the Alien Shootout scene Owlchemy had to go into the build and extend the skybox down past the horizon and tweak the placement of certain pieces of geometry so we didn't see any clipped objects in the scene.

We used many of the same techniques from the Contraption and SPT trailers such as a smoothed handheld camera with adjustable FOV, and the ability to toggle between first person and third person cameras if need be.

Rendering the position of the handheld camera inside the HMD was another vital component, so the person playing/acting could see the position of the cameraman in the room. Understanding where the camera is and how shots are being framed lets the actor know how/when/where to move and makes it easier for them to suggest ideas for camera placement etc. This was invaluable for 2-way communication while setting up shots and also makes the player feel more at ease so they don’t run the risk of bumping into the cameraman while playing. If we needed the player to look just off camera they knew exactly where it was.

Since R&M is a much more graphically intense game, we were not able to render both the smoothed first person and handheld cameras simultaneously. We had to toggle between them, but we only used the first person camera for one shot (try and pick it out!). All gameplay was captured with Bandicam at 1080p 60fps on the same computer running the game.

To film the trailer, I attached a third Vive controller to my Pilotfly H2 gimbal using my iPhone as a remote viewfinder. We shot for 2.5 days to get all the things we needed for a total of 3h 29m worth of footage! Here's a time-lapse of the shoot in my basement!

One shot I wish we could have nailed was having the camera follow Rick through a portal from one environment into another. Unfortunately due to the way the game renders and the way the scenes are assembled in Unity this just wasn’t technically possible. This GIF is about as close as we could get before the camera freaked out and started warping to the environment inside the portal.

You might be wondering with Owlchemy Labs being the developer of R&M why we didn't use their new Mixed Reality tech to make this trailer. There's a few reasons for this, the main one being that if we did shoot this live action, it would have meant that we would have had to shoot the entire trailer TWICE - once for Oculus, and again for the VIVE, since you can't shoot someone wearing two VR headsets at once :D

The costs associated with shooting on a greenscreen set with a dedicated camera crew for 2.5 days per version would have been astronomical, and we wouldn't have had the time to adjust course while shooting. The way we shot this and the other avatar based VR trailers is we will shoot for one full day, then I'll go through the footage the next day and make a quick first edit to see where we're at. Then on the next day of shooting we have an idea where we'll need to pick up shots and re-shoot things to make them even better. After the 2nd day of shooting I'll spend some more time making a better cut, then as we're close to wrapping things up, we'll do another day to get any pick-up shots we need.

This kind of schedule would not be possible if we were shooting it live, since when you're working with large crews, it's easier to work consecutive days rather than a schedule that's broken up like ours was.

The other reason we didn't use mixed reality in this trailer is that narratively it makes more sense for the player to be a real "Morty Clone" since that's what he's referred to in-game and in the trailer. The whole trailer is built around the fact that it exists within the Rick and Morty universe, and having a real live person in there in place of the character would have felt very out of place within the narrative we were going for.

Sense of Scale in Fantastic Contraption

One of the things we wanted to get across was the new "seated scale" that's part of the Oculus Touch version of Fantastic Contraption. Seeing the game through first person can be a bit deceiving and getting a real sense for how large or small the contraptions are isn't always obvious. Filming avatars in third person was a simple way to give the viewer a real sense of how large they were in the context of the rest of the world.

THIRD PERSON CAMERA

This shot clearly puts the size of the contraption in context. You can see how small it is compared to the player and the camera angle focuses your attention at the top of the shot where the contraption is travelling. The emotion of the player is also very clearly conveyed when the contraption hits the goal.

FIRST PERSON CAMERA

Until the controller comes into view at the end, it's almost impossible to know how large that contraption truly is in this shot. It could be very large and far away for all we know. The head tilt and motion also make this shot hard to watch and it's impossible to read the emotion of the player even though it's the exact same shot.

Shooting the PSVR and Oculus versions at the same time

One small wrinkle that we had to deal with for Fantastic Contraption specifically is we wanted to use this trailer for both the upcoming PSVR version and the Oculus. So, how do you shoot two trailers at once when the in-game controls are specific to each platform? Lindsay Jorgensen came up with an incredible solution: We would split the screen into quads and render only Oculus controllers in the first person and 3rd person quads, and PSVR controllers in the other two quads.
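To make the layout concrete, here's a small sketch of how a frame could be carved into four viewports, each pairing a camera with a controller model set. Which quad goes in which corner is my own assumption (the actual arrangement isn't described here), and all names are illustrative.

```python
def quad_viewports(width, height):
    """Split a frame into four equal viewports as (x, y, w, h), origin top-left."""
    w, h = width // 2, height // 2
    return {
        "top_left": (0, 0, w, h),
        "top_right": (w, 0, w, h),
        "bottom_left": (0, h, w, h),
        "bottom_right": (w, h, w, h),
    }

# Hypothetical assignment: (camera, controller models rendered in that quad)
QUAD_SETUP = {
    "top_left": ("first_person", "oculus"),
    "top_right": ("third_person", "oculus"),
    "bottom_left": ("first_person", "psvr"),
    "bottom_right": ("third_person", "psvr"),
}

def crop_for(camera, platform, width, height):
    """Find the crop rectangle in the captured frame for one camera/platform pair."""
    for quad, setup in QUAD_SETUP.items():
        if setup == (camera, platform):
            return quad_viewports(width, height)[quad]
    raise KeyError((camera, platform))
```

With a setup like this, swapping a shot from the Oculus version to the PSVR version in the edit is just a matter of cropping a different quad of the same take.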

This worked amazingly well, and about 80% of the shots in the trailer just required a simple footage swap to change over to the PSVR version. We had to remove any references to room scale shots in the PSVR version of the trailer so the only major section that needed to be completely re-captured is where the Pegasus is building the first contraption. There were a few other minor changes to other shots, but overall this solution worked incredibly well!

We obviously shot both trailers with the VIVE and it would not have been possible to do this kind of thing any other way. We needed room scale tracking for both the cameraman and the person playing the game. There is currently no possible way to do this kind of thing with the Oculus or PSVR on their own.

Remote monitoring solution for virtual cameras

One shortcoming to filming in VR is you don't have a screen on your virtual camera which makes it hard to compose shots while you're walking around. The way we handled this for the Contraption and Space Pirate Trainer trailers was super hacked together - we literally had a DSLR pointing at one of the four quads on my monitor, and had an HDMI feed from my DSLR to a TV at the far side of the room. It worked well enough, but I wanted something right on the steady-cam so the monitor was right near my hand.

I looked at a few solutions, but came across a super low latency VNC application called jsmpegVNC. It streams the contents of your screen to a browser that can be loaded up on any mobile device. The only wrinkle is that I only wanted to stream a cropped 720p section of my screen, not the whole screen. So I got in touch with the developer and he added this functionality in.

So, I stuck my iPhone 6s to my steady-cam with Fun-Tak and here's the result. The stream is running in vanilla Safari and when the phone is mounted horizontally, the 720p stream is stretched to fill the screen. This is a super low latency/low CPU overhead way of getting a wireless remote monitor on any device. I can't wait to use this on the next project.

Experimenting with VR Body IK and Animations today. #VR #madewithunity pic.twitter.com/UmmCGiMysN

— Dirk Van Welden (@quarkcannon) September 26, 2016

THE SPACE PIRATE CHARACTER RIG

The team at i-illusions created an in-game avatar and used an IK rig to drive the character (including the feet) from the position of the HMD and controllers. This worked out so well that when I was editing the footage, parts of it felt like they were 100% motion captured.

The biggest issue with the IK rig at the moment is the legs/feet don't feel completely natural 100% of the time. Once someone releases a fully lighthouse tracked body tracking system with points for the feet, knees, waist, and elbows in addition to the hands and head (along with the appropriate Unity/Unreal plugin to interpret the data) it’ll be even easier to implement in-game and the quality of motion tracking will hopefully be almost as good as something like ILM’s iMocap system that costs more than my house per shot. My feeling is it’s only a matter of time before that becomes a standard thing for animating VR avatars.

Until that becomes a reality, the solution we came up with is to mostly shoot the avatar from the waist up to avoid the problems below the waist and only show the full body in wide shots.

CAMERA CONTROLS

Next up we needed some camera controls. We use a wireless third VIVE controller and it acts as our in-game camera. I took that camera and placed it on my ghetto steady cam and we were off to the races. There’s a slider in-game to change the field of view so we could shoot with wide or telephoto lenses, and smoothing controls for both position and rotation. We also had options to turn off the score/lives indicators, the floor bounds, and the in-game ad stations so there weren’t any distracting elements on screen. The screen was split into two quads on top so we had a smoothed first person view too, but in the end, we never ended up using any first person footage.
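If you think in lens focal lengths rather than degrees, the field of view slider maps onto them through the standard pinhole relation fov = 2 * atan(sensor_height / (2 * focal_length)). Here's a small sketch of that conversion, assuming a full frame sensor height of 24 mm and a vertical field of view (which is how Unity's camera defines it). The function names are illustrative and not from the game.

```python
import math

FULL_FRAME_SENSOR_HEIGHT_MM = 24.0  # assumption: 35mm full frame equivalent

def vertical_fov_deg(focal_length_mm, sensor_height_mm=FULL_FRAME_SENSOR_HEIGHT_MM):
    """Vertical field of view in degrees for a given lens focal length."""
    return math.degrees(2.0 * math.atan(sensor_height_mm / (2.0 * focal_length_mm)))

def focal_length_mm(fov_deg, sensor_height_mm=FULL_FRAME_SENSOR_HEIGHT_MM):
    """Inverse mapping: the focal length that produces a given vertical FOV."""
    return sensor_height_mm / (2.0 * math.tan(math.radians(fov_deg) / 2.0))
```

Under these assumptions a 12 mm lens works out to a 90 degree vertical FOV, and longer lenses give progressively narrower, more telephoto framing.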

Droid camera

Dirk also added the ability to attach a camera to one of the in-game droids. With the right amount of smoothing, this allowed us to get some really cool sweeping pans of the whole environment for 'free' with very little intervention on our part.

Xbox Controller Camera

We also had the ability to move around the third person camera with a regular Xbox controller. This allowed us to place the camera in places we couldn't physically move and get some super cool cinematic wide shots of the player within the environment.

Experimenting and getting cool shots

This video shows how we shot the over the shoulder sequence and how we could feel free to experiment with some different ideas. We wanted to get a “FPS” like shot of the weapon swap, but I was having trouble filming my friend Vince actually doing it since he was swinging his arm everywhere and keeping the gun framed properly was almost impossible at such a long focal length.

So Vince came up with the idea of just grabbing the steady cam with his other arm and holding it to his body so it would be locked to his position. Once he had it in his hand, I just offset the virtual camera to be at the right position and angle, and boom, there’s your awesome tracked over the shoulder shot!
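Offsetting the virtual camera from the tracked controller is a pose composition: rotate a fixed local offset by the controller's rotation and add it to the controller's position, so the offset travels with whoever is holding the rig. A minimal sketch, assuming unit quaternions stored as (w, x, y, z). The names are hypothetical and this is not the code from the game.

```python
def quat_mul(a, b):
    """Hamilton product of two quaternions (w, x, y, z)."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (aw * bw - ax * bx - ay * by - az * bz,
            aw * bx + ax * bw + ay * bz - az * by,
            aw * by - ax * bz + ay * bw + az * bx,
            aw * bz + ax * by - ay * bx + az * bw)

def rotate(q, v):
    """Rotate vector v by unit quaternion q (computes q * v * q^-1)."""
    conj = (q[0], -q[1], -q[2], -q[3])
    _, x, y, z = quat_mul(quat_mul(q, (0.0, v[0], v[1], v[2])), conj)
    return (x, y, z)

def offset_camera_pose(ctrl_pos, ctrl_rot, local_offset_pos, local_offset_rot):
    """Virtual camera pose = controller pose combined with a fixed local offset."""
    rotated = rotate(ctrl_rot, local_offset_pos)
    pos = tuple(p + r for p, r in zip(ctrl_pos, rotated))
    rot = quat_mul(ctrl_rot, local_offset_rot)
    return pos, rot
```

Because the offset is expressed in the controller's local space, it only has to be dialed in once. After that the virtual camera stays locked to the player's body as they move.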

Mixed Reality vs In-Game Avatars

I’m really excited by the prospect of being able to do this kind of virtual cinematography in VR. This technique isn’t new - James Cameron did a lot of virtual cinematography on Avatar and this technique was expanded on and used to great extent on The Jungle Book, but the setups they used are in the hundreds of thousands of dollars and infinitely more complex to operate. Now we can do basically the same thing for the cost of a VIVE, another controller, and a bit of development work. It’s incredible that we can basically film a live virtual action sequence in my basement! The next step will be getting more than one virtual camera in the room so we can have a few people filming at once!

Mixed reality is an amazing technique and tool as well. But I think every project needs to weigh the options and decide which really works best for their game. Take something like Rec Room - The entire game is cartoonish so placing a live action person inside of that environment would feel out of place next to all the other in-game avatars. But something like Google Earth has no such abstraction. Placing a real person in that world (even though those MR shots are faked) makes complete sense.

Just like normal game trailers, every game's needs are different and there's no one size fits all solution for every project. Think about what works best for your game/project, and what will create the most engaging, entertaining, and compelling result.

Good luck! :D
